Welcome to The AI Report!


Friday’s AI Report

1. 🛡️ Musk’s xAI Signs EU Safety Chapter

2. 🚀 Reinvent compliance from the ground up with CompAI

3. 🧑‍🎓 How AI supports 2 million students a day

4. ⚙️ Trending AI tools

5. ‼️ Qwen3-Coder Sparks Security Concerns in the West

6. 🌎 Google’s AI Maps Climate Change in High Detail

7. 📑 Recommended resources

Read Time: 5 minutes

Musk’s xAI Signs EU AI Safety Chapter

🚨 Our Report

Elon Musk’s AI startup xAI has agreed to sign the “Safety and Security” chapter of the EU’s AI Code of Practice, a voluntary framework designed to help AI companies align with the EU’s AI Act. While the move signals a partial endorsement of European AI oversight, xAI criticized other parts of the code as overly restrictive and harmful to innovation.

🔓 Key Points

The EU Code of Practice includes three chapters: transparency, copyright, and safety & security.

xAI is signing only the safety and security chapter, which targets providers of the most advanced foundation models.

In a public statement, xAI said other sections of the code are “profoundly detrimental to innovation,” specifically calling out the copyright chapter as an overreach.

Other major AI players are split: Google and Microsoft are on board, while Meta has declined to sign, citing regulatory uncertainty and scope overreach.

🔐 Relevance

xAI’s move shows a selective approach to AI governance: signaling support for safety protocols while resisting broader compliance requirements. As the EU continues positioning itself as the global rulemaker for AI, xAI’s partial alignment could allow it to engage in policy discussions while preserving its product development flexibility. This also highlights diverging strategies among tech giants navigating early-stage global regulation.

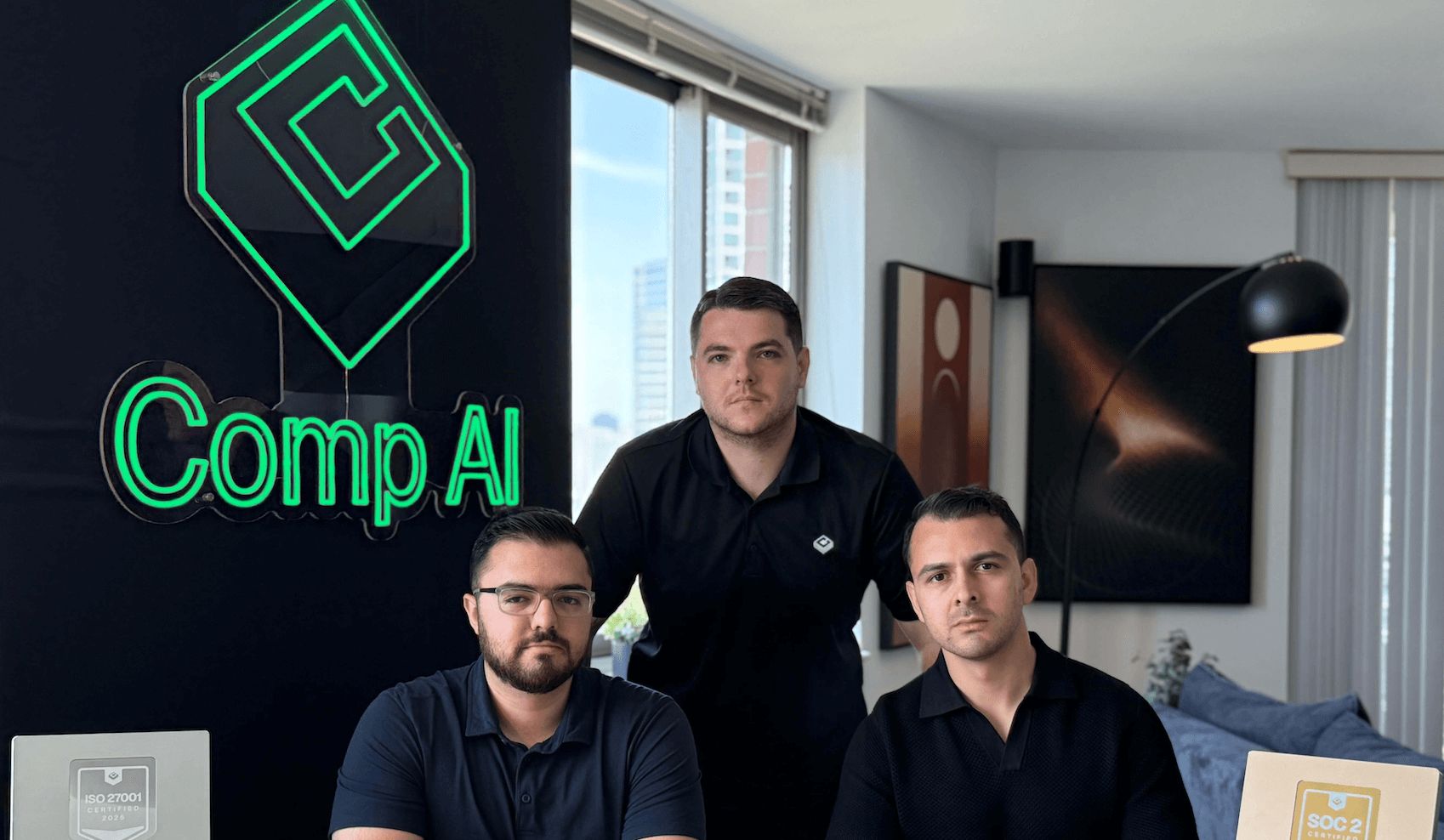

Reinvent compliance from the ground up with CompAI

Big news for AI and compliance! You heard it here first: Comp AI has secured $2.5M in pre-seed funding, led by OSS Capital, to disrupt the notoriously complex SOC 2 market with an open-source, AI-led approach. They're on track to help 100,000 companies achieve compliance with frameworks like SOC 2 and HIPAA.

Traditional compliance is a recognized pain point. Comp AI is leveraging the latest advances in agentic AI to automate up to 90% of the process, promising to save founders weeks of time and thousands of dollars. They've already helped their customers save over 2,000 hours combined.

This investment will fuel their ambitious expansion plans. Expect to see their open-source platform grow, enabling security professionals to contribute, and the full launch of their AI Agent studio for seamless, automated evidence collection. It's clear: AI is completely reinventing GRC.

How AI supports 2 million students a day

Physics Wallah (PW), one of India’s largest EdTech platforms, built an AI-powered study companion called Gyan Guru to handle the daily influx of student questions at a massive scale.

With over 2 million learners on the platform each day, answering academic doubts manually would be prohibitively expensive and operationally infeasible.

Gyan Guru uses a retrieval-augmented generation (RAG) architecture with Azure OpenAI to retrieve answers from a vectorized knowledge base of over 10 million solved doubts and 1 million Q&As built by PW.

Students now get immediate, hyperpersonalized academic and support guidance 24/7, improving concept clarity and boosting competitive exam prep for JEE and NEET aspirants.
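For readers curious how the retrieval half of a RAG setup like this works, here is a minimal sketch in Python. It is not PW's implementation: the three sample doubts, the word-count "embedding", and the function names `embed`, `retrieve`, and `build_prompt` are all hypothetical stand-ins. A production system would use a real embedding model and a vector database, then send the assembled prompt to a language model.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a knowledge base of solved doubts.
SOLVED_DOUBTS = [
    ("What is Newton's second law?",
     "Force equals mass times acceleration (F = ma)."),
    ("What is the chemical formula of water?",
     "Water is H2O: two hydrogen atoms and one oxygen atom."),
    ("What is the powerhouse of the cell?",
     "The mitochondrion produces most of the cell's ATP."),
]


def embed(text):
    """Toy embedding: lowercase word counts.

    Assumption: a real system would call an embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "Vectorize" the knowledge base once, up front.
INDEX = [(embed(question), question, answer) for question, answer in SOLVED_DOUBTS]


def retrieve(question, k=2):
    """Return the k stored (question, answer) pairs most similar to the query."""
    query_vec = embed(question)
    ranked = sorted(INDEX, key=lambda entry: cosine(query_vec, entry[0]), reverse=True)
    return [(q, a) for _, q, a in ranked[:k]]


def build_prompt(question, k=2):
    """Assemble the retrieved context and the student's question into one prompt."""
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in retrieve(question, k))
    return (
        "Answer using only the solved doubts below.\n\n"
        f"{context}\n\nStudent question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("Explain Newton's second law of motion", k=1))
```

The design point is that the model answers from retrieved, already-solved doubts instead of from memory alone, which is what lets a knowledge base of millions of answers be reused at scale.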

Trending AI tools

ChatNode is for building custom, advanced AI chatbots that enhance customer support and user engagement ⭐️⭐️⭐️⭐️⭐️ (Product Hunt)

Smart Clerk turns bank and credit card statements into fully categorized, accountant-ready financial documents.

Acedit is an AI interview coach that delivers real-time questions, tailored answers, and STAR-based feedback to boost confidence and performance.

Qwen3-Coder Sparks Security Concerns in the West

Alibaba’s latest AI coding tool, Qwen3-Coder, is raising alarms in the West over potential security risks, despite its open-source label and claims of top-tier capability.

Experts warn the tool could introduce undetected vulnerabilities or serve as a “Trojan horse,” especially given China’s national security laws and lack of transparency around backend infrastructure.

With over 300 S&P 500 companies already using AI in development, the rise of foreign-built agentic AI models has sparked intensified calls for regulation, oversight, and better detection tools.

Google’s AI Maps Climate Change in High Detail

Google’s AlphaEarth Foundations model compresses satellite data into rich, color-coded embeddings to reveal ecosystem changes, land use patterns, and human impact, even in areas like Antarctica and cloud-covered regions of Ecuador.

The model is reportedly 23.9% more accurate than comparable AI tools and can generate insights at a 10-meter resolution, helping governments and companies optimize solar panel placement, crop growth, and infrastructure planning.

Acting as a “virtual satellite” built on DeepMind tech, AlphaEarth is being added to Google Earth Engine and tested with partners like MapBiomas and the Global Ecosystems Atlas to map undermapped rainforests, deserts, and wetlands at scale.

PODCASTS

What’s happening in AI right now?

This episode unpacks the latest signals, from GPT-5 rumors to Google and Microsoft’s push into vibe coding to the White House’s AI Action Plan, so you can stop guessing and start understanding what it all means for your work.

We read your emails, comments, and poll replies daily.

Until next time, Martin, Liam, and Amanda.